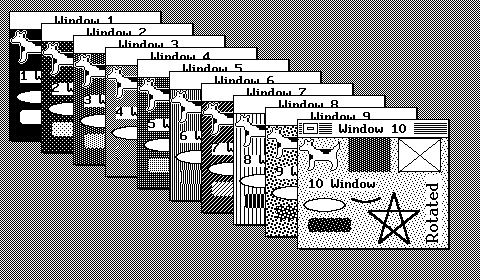

First implemented here (in 2021) as a Free Pascal library targeted at driving Waveshare's small e-Paper displays for Raspberry Pi. The above sample displays (from the included test program) demonstrate that you can utilize multiple overlapping display windows (if you want them), clipping to arbitrary shapes (ditto), multiple font styles, sizes, and rotation, full justification of text, large font sizes, a variety of geometric shapes, and bitmap scaling and orientation.

Clunkdraw in Pascal tarball: clunkdraw.tgz

Clunkdraw in C tarball: clunkdrawc.tgz

A demonstration of Conway's Game of Life written (in C) using Clunkdraw on a Raspberry Pi 3B+ driving a 320×240 Adafruit 2.2" TFT LCD 16-bit color display:

Acorn Movie

Just how much faster does something like Clunkdraw need to be? (The source for this 12kB Life program, which can optionally (with the help of ffmpeg) make the movie, is included in the package.)

Ah, Life. This one, running at around 140 generations/second on a 320×240 field, is greatly superior to the first computerized Life I ever ran, 45 years ago on my old 1802 Elf with 1kB of RAM: about 2.5 seconds/generation on a 64×32 field! Very sluggish[1]; it would have taken nearly four hours to run an acorn to ground, if an acorn could have survived to term on the smaller field. (It could not.) As quick as this is, compared to that old Elf, it's at least that far behind the current state of the art on home computers.
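For a sense of what one "generation" costs, here is a minimal sketch of a single Life step in C. It is not the code from the package: the field size, the function names, and the treatment of off-field cells as dead are all assumptions made for illustration.

```c
#define LIFE_W 64   /* assumed field width (the old Elf's field size) */
#define LIFE_H 32   /* assumed field height */

/* Count the live neighbours of (x, y); cells beyond the edge count as dead. */
static int life_neighbours(const unsigned char *cur, int x, int y)
{
    int n = 0;
    for (int dy = -1; dy <= 1; dy++) {
        for (int dx = -1; dx <= 1; dx++) {
            int nx = x + dx, ny = y + dy;
            if ((dx != 0 || dy != 0) &&
                nx >= 0 && nx < LIFE_W && ny >= 0 && ny < LIFE_H)
                n += cur[ny * LIFE_W + nx];
        }
    }
    return n;
}

/* Compute one generation from cur into next (two separate buffers,
   each LIFE_W * LIFE_H bytes, one byte per cell, 0 = dead, 1 = alive). */
void life_step(const unsigned char *cur, unsigned char *next)
{
    for (int y = 0; y < LIFE_H; y++) {
        for (int x = 0; x < LIFE_W; x++) {
            int n = life_neighbours(cur, x, y);
            /* Birth on exactly 3 neighbours; survival on 2 or 3. */
            next[y * LIFE_W + x] =
                (unsigned char)(n == 3 || (cur[y * LIFE_W + x] && n == 2));
        }
    }
}
```

The acorn is generally reported to settle after 5206 generations. At 2.5 seconds/generation that is about 13,000 seconds, or roughly 3.6 hours; at 140 generations/second it is well under a minute.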

The 1984 introduction of the 68000-based Macintosh electrified the entire microcomputer industry, this company included. It was proof that a modestly priced 16-bit system using a high-level language (like C) was not only at least as powerful as the Z-80, with a much larger flat address space that dramatically eased programming, but was also capable of supporting nearly any customer's written language using a graphics-only hardware platform, and even of supporting a paradigm shift to a GUI environment.

(Apple's high list prices did not dissuade the engineers at the Small Company; they were well aware of the very modest incremental component cost over a Z-80 solution. While Intel's extant 8086 CPU family also would support more memory than a Z-80, the Company was already highly experienced with the memory bank switching and code segmentation necessary to get around the Z-80's 64KB limit, and had absolutely zero interest in jumping 'forward' to a 'solution' that still required all the same kind of software machinations in order to avoid the very same 64KB limit. Not Good Enough, and thank you for playing, Intel. Other reasonable candidates were offered by Zilog [Z8000, still with a marked 64KB odor], National Semiconductor [16032, late, slow and buggy, though elegant], and Western Electric [WE32100, obscure, but also elegant], but Motorola's offering was the most mature, and support software [OS, C compiler] was readily available, as were second sources of parts through Hitachi. Intel's own 386, their first product to actually compete effectively on an even footing with the 68000, was just becoming a potential choice at this time, but it was new, expensive, and deliberately single-sourced, all of which removed it from consideration. Also, its primary goal was compatibility [with a processor we weren't already using] rather than capability, and so it was much less interesting to the Company than other choices.)

The subsequent releases of comparable low-cost Atari and Amiga systems hammered home the proof, and commercial releases of Unix-based systems using the same 68000 processor family showed just how capable these systems could be. All of a sudden the Company had a clear direction in which to go, one that would be a significant improvement over the Z-80 product line, both in customer perception and in ease of development.

However, the first 68010-based next-generation systems the Company developed suffered from a lack of vision on the part of management. While the hardware was very good, as was the DNIX-based system software and the DIAB C compiler, the application layer software under consideration at the time was very pedestrian, and was based around a VT220 non-graphics 24×80 terminal model that was barely even capable of supporting European languages. (It didn't, but could have easily enough.) No Arabic, no Hangul, and no graphics.

A major problem was that while everybody involved could see the improved capability of the new product line's hardware base, they had essentially all spent that same performance dollar, resulting in severe under-performance in the final result. Crippling underperformance, in fact. It had been forgotten that the 68010 'advantage' had already been spent: on using C instead of assembly language. The 68010 had only twice the clock rate of the Z-80, and twice the bus width. Yes, it supported a lot more memory directly and thus eliminated bank-switching considerations, and virtual memory meant that the memory ceiling was very 'soft', and the CPU's more and larger registers cut down on all the unproductive data shuffling that generally plagued 8-bit processors, but testing had shown that when using C it wasn't really all that much faster than the Z-80 had been at running custom application code. And, the graphics-only video hardware of the new platform was decidedly more sluggish than the more expensive (and memory-thrifty, but far less flexible) dedicated-language video hardware of the Z-80 platforms, so even if there had been a performance surplus it was more than gone already. (The new architecture was expressly designed to improve development time, not application speed—application execution speed was basically never a problem on the old products and we were willing to cede some of it for improved development speed.) The design intent here was simple: write efficient applications the same way you did before, but 1) you get to use C instead of assembler, and 2) you don't have to waste a lot of time packing your code down to fit into a Procrustean 64kB memory bed.

(We're getting there, be patient!)

The prototypes were slow. Orders of magnitude (yes, more than one) too slow. It was common during development to push some menu keys and then go to lunch. Once you came back it might be ready to look at to see if it had done the right thing, but lunchtime wasn't always long enough. The best engineers in the company were thrown at the problem, and told to 'optimize' it. They tried, and made some substantial headway, improving its speed by 4×, but the starting point was so bad... The selected architecture was simply not practical on the platforms of the day.

The language vendor, when contacted, said "Don't do that, that language is meant only for rapid prototyping of database queries. [This was before the widespread availability of SQL.] Why aren't you accessing the database using the conventional API?" High-end engineering workstations, many times more expensive than any customer would consider purchasing for their bank tellers, underperformed by at least one order of magnitude when running the new code, even after optimization. The Company's own engineers unanimously said "this is bad and can't be fixed, we have to do something else." This all fell upon deaf ears.

Management hired performance consultants to show the Company's engineers the errors of their ways. The consultants tested the new platform, its hardware and system software, and ranked it higher than anything else in its class they had ever seen. Their report basically said: "Listen to your people, they're very good." No matter, management had somehow hitched its wagon to this particular 'solution', and that was that. The deaf ears became hostile ears: The engineers were told that anybody who persisted in nay-saying had better just shut up and fix the problem, or else seek employment elsewhere.

Of course, it was not just that management had chosen the wrong software architecture for the new product, if you don't make an occasional mistake you're not trying hard enough. The sin was that they were completely unwilling to admit that it had been a mistake, and to do something different while it was still possible to do so. The engineers could not solve the architectural problem, and the managers (many of them from a mainframe computing background and still vaguely resentful of the limitations of the microcomputers they found themselves stuck with) would not budge on the architecture. The Company's entire future was tied to this one decision; the managers were betting the farm, not that it was their farm to bet. And the months, eventually years, rolled by...

To recap: the new hardware and system software were best-in-class, and the system had enough memory and performance to rapidly deploy new procedural applications written in C. But all that was available to the application for user I/O was a lackluster VT220 subset terminal emulation. This was no better than what the old Z-80 systems could offer, and in fact was worse because it was really quite sluggish in comparison. (A Z-80 could drive, using assembly language, a memory-mapped hardware ASCII video subsystem extremely fast. Video responsiveness of all the old systems was unsurpassed in the industry.) The new video hardware had met its design targets of supporting the written language of any customer, and supporting facsimile (signatures, checks, etc.) graphics, all at a modest cost, but there was no system or application software to support this, only the terminal emulator, and the innate performance of the display hardware was decidedly marginal as it required system software to do all the character generation rather than using custom hardware dedicated to that purpose.

We needed something that looked better, a lot better, to draw attention away from the fact that applications on the new platform felt noticeably slower than on the old. We needed something that could better support languages, and facsimile graphics, than the terminal emulation or the old products. Emphasis on what you've gained compared to the old, not on what you've lost. Something dazzling enough to help explain away the delay in providing the new product, since we weren't about to make public the real reason we were late.

The X Window System existed by then, but all known implementations themselves consumed memory in excess of the total available on the target system, leaving none for the application or the operating system, and had only been seen working on much faster hardware than the target system, so it was of no real interest or use.

(And we're here, finally.)

The alternative product was demonstrable (to customers) within three months, and was a roaring success. (Did we mention that the engineering staff really was quite good?) Some heads did end up rolling regarding the database debacle, as one might expect. Unfortunately the Empress database itself also got caught up in the purge, although it was not at fault and was itself a good performer. We had paid a million dollars for it and its M-Builder query prototyping language that we'd mis-applied as an application environment, and we ended up throwing it all away. We eventually wrote our own application-specific database, but we'd probably have been better served by using the one we'd already bought. But, feelings were running pretty high at that point, and the torches and pitchforks were out...

Eventually Clunkdraw gained a network-independent client/server layer, vaguely similar to what X Windows offers but with multicasting abilities, which dramatically improved what could be done with the system, especially in training, supervisory, and remote debugging roles. But the imaging model remained that of Quickdraw. We also ported Clunkdraw, in C and assembler, and the top-notch DIAB C compiler itself, to the TI 34010 graphics CPU for use in our later color products. At some point gateway programs to both Windows 3.1 and Macintosh systems were created, allowing them access to our financial applications. (The on-the-fly translation from Clunkdraw to true Quickdraw was particularly easy, as you can imagine, but the network connectivity was much more difficult, as we did not use TCP/IP but rather an Ethernet form of X.25 networking, and the target Macs didn't even use Ethernet.) Eventually there were many man-years invested in this code base, and it was generally highly satisfactory within its market niche.

ISC's late-70's fonts for the Z-80 products were originally laid out on graph paper and hand-coded in EPROMs (for hardware ASCII video cards), as was customary for the time. Naturally this was a real pain, and in the early 80's I wrote an interactive 'chargen' program for developing newer character generator EPROMs (including double-wides) using BDS C on one of our CP/M S-100 development stations, driving a Microangelo graphics card. The dramatically easier tool flow encouraged spending more time on designing better-looking characters, which we used to good advantage in the final Z-80 products.

The first font for the new 68010 hardware platform was embedded in its EPROM firmware, as part of a RAM-less (except for the character display itself) diagnostic monitor program emulating an extremely dumb terminal: the Lear-Siegler ADM-3, one of which we had in our lab and which was connected to the very first prototype of the new hardware, and which echoed the first cries of the newborn hardware over its serial port. The all-assembly monitor program was integral to bringing up the new hardware design, and by not using RAM, and keeping all internal state in the 68010's generously-sized internal register bank, the program was very useful in bringing up the initial hardware, even when the RAM was not behaving correctly, and proving that the graphics-only platform could indeed display a page of text satisfactorily. (There was much internal interest in proving that this radical architectural departure was viable, as you might imagine. A single engineer, your author, was responsible for the workstation's hardware design [including its custom MMU], the POST and monitor program, and all the graphical code, including the later Clunkdraw.)

The new product's initial terminal emulator, buried in the operating system's kernel, used a font derived from the one in the monitor program. Once through the boot process the new product was visually indistinguishable from a VT220, many of which were in use at that time for the company's internal business operations. (Talking through LAT terminal concentrators over original Ethernet to a single VAX-11/780 running VMS and the ALL-IN-1 office applications suite.) The workstation's boot process was graphical, in an unsuccessful attempt to shame the rest of the developers into treating the hardware as more than a VT220.

In the late 80's Clunkdraw's ISC fonts all came out of this genesis, using newer GUI-based versions of chargen seeded with the extant hardware fonts. The Clunkdraw GUI, fonts, and chargen all evolved together, bootstrapping each other and allowing abandonment of the CP/M-based tools once far enough along. Clunkdraw's Macintosh font support was added later, to take advantage of the massive library of larger and more decorative fonts available there. Performance-sensitive applications, however, stuck with the ISC fonts. They were somewhat less space-efficient than their cousins, but required less shifting and masking to display, which translated directly into speed. (This last only applies to the original Clunkdraw assembly-language implementations.)

I eventually found myself again working for the same man that had founded the Small Company. I was looking at using a small monochrome e-Paper display, because of its unequalled strengths in the ambient lighting department. Unfortunately the demo library that came with the display kind of sucked, especially the text, and I found myself waxing nostalgic for the simplicity, capability, and familiarity of Clunkdraw. (Especially as regards good-looking large-scale text.) The e-Paper display did not integrate into the host Raspberry Pi's GUI system; you are intended to drive it as a pure peripheral, using an I/O library of your own. (Its use model resembles printing on paper: think flash memory rather than RAM, so the standard display channels aren't a good fit for the hardware.)

And then the light went on: Why not resurrect Clunkdraw? At least partially? The environment I needed was Pascal, but Quickdraw itself was clearly very happy in Pascal, since it started out there, and porting from basic C to basic Pascal is a near-trivial mechanical exercise. The RPi is very fast, avoiding assembly language probably isn't a hardship now, and the target display was not large. And I only needed a couple of calls and one or two of the nicer-looking fonts... all the familiar old arguments. And so the die was thrown. All I needed to do was a mechanical translation of C to Pascal for the few parts I needed, and to write some naive pixel-plotting code to replace the elaborate optimized assembly language of original Clunkdraw, and I'm done. It looked like only a few days would be required for what I needed in the new product, and the results would be infinitely better-looking and more familiar than what I'd get continuing on with that wretched e-Paper demo library...

It was so much fun and worked so well that I got carried away, and ended up porting everything in the monochrome imaging layer over the course of a month. (Not the networking layer nor the color code, which were themselves substantial and not a good fit to the new environment.) The new drawing code itself is very naive, and an extremely poor performer when compared to original Clunkdraw. But, the RPi is so fast and our needs were so modest that it simply doesn't matter here.
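To give a flavor of what "very naive" means here, the following is a minimal C sketch of everything's-a-pixel drawing on a packed 1-bit-per-pixel buffer. It is not Clunkdraw's actual code: the buffer dimensions, the MSB-first bit order, and the names `plot_pixel`, `get_pixel`, and `fill_rect` are all assumptions made for illustration.

```c
#include <stdint.h>

#define FB_WIDTH  200                    /* assumed panel width in pixels  */
#define FB_HEIGHT 200                    /* assumed panel height in pixels */
#define FB_STRIDE ((FB_WIDTH + 7) / 8)   /* bytes per row at 1 bit/pixel   */

static uint8_t framebuffer[FB_STRIDE * FB_HEIGHT];

/* Set or clear one pixel; out-of-range coordinates are silently ignored. */
void plot_pixel(int x, int y, int on)
{
    if (x < 0 || x >= FB_WIDTH || y < 0 || y >= FB_HEIGHT)
        return;
    uint8_t mask = (uint8_t)(0x80u >> (x & 7));  /* assumed: MSB is leftmost */
    uint8_t *p = &framebuffer[y * FB_STRIDE + (x >> 3)];
    if (on)
        *p |= mask;
    else
        *p &= (uint8_t)~mask;
}

/* Read one pixel back; out-of-range coordinates read as 0. */
int get_pixel(int x, int y)
{
    if (x < 0 || x >= FB_WIDTH || y < 0 || y >= FB_HEIGHT)
        return 0;
    return (framebuffer[y * FB_STRIDE + (x >> 3)] >> (7 - (x & 7))) & 1;
}

/* Fill a rectangle the naive way: one plot_pixel() call per pixel.
   right and bottom are exclusive. */
void fill_rect(int left, int top, int right, int bottom, int on)
{
    for (int y = top; y < bottom; y++)
        for (int x = left; x < right; x++)
            plot_pixel(x, y, on);
}
```

An optimized implementation would write whole bytes or words per row instead of calling a bounds-checked routine for every pixel, which is the kind of work the original assembly language did. On a fast host with a small display, the per-pixel approach is simply good enough.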

No, it's not C, and it's certainly not C++. It's a near-trivial exercise to port it to C, though. It is probably a nearly trivial exercise to port it to any procedural language environment.

Original Clunkdraw was built as a .a library (remember this predated the advent of shared object libraries, and certainly predated Linux) so that a client application needn't contain any code it wasn't using. Pascal does not really support this model. Rather, it prefers larger-grained units. Clunkdraw in Pascal has been split into nine units, mostly just to make it easier to work on.

Also, original Clunkdraw stored all its fonts on disk, in part because the sum of all its fonts occupied a substantial portion of a client's address space, but this definitely introduced an unwelcome external dependency. The RPi supports much larger address spaces, so this implementation puts all but one of the fonts in an optional unit, a large one, but one which can be avoided if you don't need the fonts. Clunkdraw in Pascal relies on nothing outside of itself, and adds no additional dependencies at either compile or run time.

Because it doesn't look like Pascal supports the same concept as C's function pointers, which allows for late binding, the number of units is relatively small, and things are packaged together. Unit X refers to Y, which in turn refers to Z, and you end up including a bunch of the units as often as not. This could maybe be done better by an expert, which I am not. But, this is good enough for now.

There are nine units, plus test programs and etc.:

| Source Size | Object Size | File | Contains |
|---:|---:|---|---|
| 105,568 | 16,001 | epaper.pas | E-Paper SPI access |
| 155,901 | 58,776 | clunkdraw.pas | Lines; Rectangles; Region clipping, drawing; Support |
| 5,342,618 | 1,087,081 | clunkfonts.pas | All but one font |
| 30,367 | 8,724 | clunkpoly.pas | Polygons |
| 38,358 | 11,940 | clunkregions.pas | Region manipulation |
| 33,800 | 7,994 | clunkrounds.pas | Ovals; Arcs and Wedges; Rounded Rectangles |
| 6,805 | 2,305 | clunksave.pas | MacPaint and PBM file saving (for screen shots) |
| 70,359 | 25,802 | clunktext.pas | Text drawing; Default font |
| 91,510 | 21,396 | clunkwinds.pas | Overlapping windows |

The size of this library is no problem at all for a Raspberry Pi, or something similar, but it's far too big for use on an Arduino. A fine-grained .a C library of Clunkdraw might be useful on an Arduino, if you were not using very many of the Clunkdraw features. I have considered porting Clunkdraw again, back to C for this purpose, but so far have not done so...

I needed to do some drawing on an overlay (over a video feed) on a small RPi RGB565 screen, and my thoughts turned again to Clunkdraw. Its drawing model was never strictly monochrome, and given the naive low-level everything's-a-pixel implementation it wasn't even difficult to extend it to color. But, I needed it in C this time. I first did a quick port of what I needed from the Pascal files, which worked but did not please me. Clunkdraw deserved better, and what of the poor memory-limited Arduinae that might wish to avail themselves of it...
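For reference, RGB565 packs a pixel into 16 bits: 5 bits of red, 6 of green, 5 of blue. The sketch below shows the usual packing arithmetic and one way a 1-bit "ink" decision from a naive per-pixel plotter could be mapped onto two colors. The function names are hypothetical, and this is not necessarily how Clunkdraw's color code does it.

```c
#include <stdint.h>

/* Pack 8-bit R, G, B into RGB565 (bit layout: rrrrrggg gggbbbbb). */
uint16_t rgb565(uint8_t r, uint8_t g, uint8_t b)
{
    return (uint16_t)(((r & 0xF8u) << 8) |   /* top 5 bits of red   */
                      ((g & 0xFCu) << 3) |   /* top 6 bits of green */
                      (b >> 3));             /* top 5 bits of blue  */
}

/* Hypothetical helper: turn a monochrome ink/no-ink decision into one of
   two RGB565 colors, as a per-pixel plotter might when extended to color. */
uint16_t ink_to_rgb565(int ink, uint16_t fore, uint16_t back)
{
    return ink ? fore : back;
}
```

Because the low-level code already treats everything as a pixel, widening the stored value from one bit to sixteen touches only the innermost plotting routine in a design like this.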

Well, in C I do know how to chop things up finely.

For an embedded, dedicated solution a shared library offers no advantages whatsoever, and has the significant disadvantage of always having all the code resident, whether you need it or not. It also requires at least a rudimentary operating system on the target device, which isn't usually a feature of small embedded systems. But as a .a library, non-shared, any given client, on any kind of platform, can limit itself to only what it needs from the library. (Case in point: a "Hello, world!" GUI application weighs in at just under 10kB, font included. Any Arduino would be quite happy to host such a modest client.)

I grabbed the Pascal sources and had at it, putting each function into its own source file as I converted. There are currently 614 source code files, including fonts. This translates into 614 members in the clunkdraw.a archive.

If you include the library in your final compile, the linker will extract only what you need from the library:

    cc testhello.c clunkdraw.a

Of course, without some degree of care you'll still get most all of it, especially the fonts. That's because Clunkdraw, by itself, doesn't know which of the available fonts or features you're not using. The parent QuickDraw drawing model doesn't require you to declare up front what features you're going to be using, and so in a sense it has to be prepared for anything. That tends to bring in all the code, hampering us when we're trying to be small.

C's function pointers, which allow for late binding, allow some degree of run-time insulation in the inter-module references. This means that, with care, you can avoid including large chunks of Clunkdraw that you don't want.
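The general pattern can be sketched as follows. This is an illustration only, with hypothetical names (`scale_hook`, `init_scaler`, `effective_size`); it is not Clunkdraw's actual source. The core routine reaches the optional feature only through a pointer, so the only link-time reference to the feature sits in the init routine.

```c
#include <stddef.h>

/* Hypothetical hook: stays NULL unless the optional feature is initialized. */
typedef int (*ScaleHook)(int size);
static ScaleHook scale_hook = NULL;

/* The optional feature. In a fine-grained archive this would live in its
   own member, named only by init_scaler(). */
static int scale_double(int size)
{
    return size * 2;
}

/* Hypothetical stand-in for an Init...() call: the one place that names
   the optional code, and so the one place that drags it into the link. */
void init_scaler(void)
{
    scale_hook = scale_double;
}

/* Core routine: it names no scaler symbol, so by itself it pulls none in.
   Without the hook installed it falls back to doing nothing special. */
int effective_size(int size)
{
    return scale_hook ? scale_hook(size) : size;
}
```

In this single-file form everything is linked regardless; the saving appears only when each function sits in its own archive member, so that a client which never calls the init routine never causes the linker to extract the feature.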

If you don't want the font scaler, don't call InitFonts(). If you don't want the windowing system, don't call InitWindows() or any of the other windowing subroutines. In fact, if you draw anything other than rectangles you might be surprised at how much of the library you'll get. But, all is not lost.

If size matters, you're going to want to get familiar with the link map your compiler can make. For gcc, you get the map via:

    gcc ... -Xlinker -Map=file.map

Examine the .map file to see what gcc has taken from the library. The results may surprise you.

There are three general techniques for reducing library code inclusion in these circumstances:

    const FontTableEntry EmbeddedFonts[] = {
        { 0, 12, &LaCenter12_Font},
        { 1, 12, &Spangle12_Font},
        { 2, 18, &Pullman18_Font},
        ...
        { 0, 0, 0}
    };

and place that in your program somewhere. The linker will not pull the default array out of the library, and so will not pull the un-referenced fonts either.
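A table in this shape, terminated by an all-zero entry, can be searched with a simple loop. The sketch below is an assumption-laden illustration, not Clunkdraw's real declarations: the `FontRec` and `FontTableEntry` layouts, the meaning of the first two fields (taken here to be a family number and a point size), the demo font names, and `find_font()` are all hypothetical.

```c
#include <stddef.h>

/* Hypothetical stand-in for the real font record. */
typedef struct {
    unsigned short marker;
} FontRec;

/* Hypothetical layout matching the { number, number, pointer } entries. */
typedef struct {
    int family;            /* assumed: font family number          */
    int size;              /* assumed: point size                  */
    const FontRec *font;   /* NULL (0) in the terminating entry    */
} FontTableEntry;

/* Two made-up fonts, standing in for the ones a client actually wants. */
const FontRec DemoA12_Font = { 1 };
const FontRec DemoB18_Font = { 2 };

/* A client-supplied table naming only the fonts that should be linked. */
const FontTableEntry DemoFonts[] = {
    { 0, 12, &DemoA12_Font },
    { 2, 18, &DemoB18_Font },
    { 0, 0, NULL }
};

/* Walk the table until the terminating entry; NULL means "not embedded". */
const FontRec *find_font(const FontTableEntry *table, int family, int size)
{
    for (; table->font != NULL; table++) {
        if (table->family == family && table->size == size)
            return table->font;
    }
    return NULL;
}
```

Since the table is the only thing that names the font records, leaving a font out of the table removes the last reference to it, and a static archive link then has no reason to extract it.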

So, supply a dummy _AddStyle() routine in your own code somewhere that'll be resident in the executable before the link with clunkdraw.a:

    void
    _AddStyle() /* Saves 2kB */
    {
    }

On ARM Cortex this occupies 4 bytes instead of the usual ~2kB. If you mistakenly call for algorithmic styling with this in place, you naturally won't get any styling.

srcCopy or notSrcCopy modes, you can disable the _DrawIt() routine by providing:

    void
    _DrawIt() /* Saves 2.7kB */
    {
    }

(If you do this you don't need to also disable _AddStyle(), as _DrawIt() is what calls _AddStyle().) Having done this, if you mistakenly try to use any of these features nothing will be drawn.

    void
    _DrawISCChar() /* Saves 1.5kB */
    {
    }

or:

    void
    _DrawMacChar() /* Saves 1.5kB */
    {
    }

The default font is an ISC font, FYI. If you mistakenly try to draw with a disabled font format, nothing will be drawn.

    const FontRec LaCenter12_Font = { /* Saves 2.6kB */
        0xFFFF
    };

You don't need to do this if you're not drawing or measuring text, because the fonts wouldn't have been included anyway. Of course, you've got to have included whatever fonts you do want to use, as mentioned above.

_MoveTo() instead of MoveTo(). Know that the angled line drawing that MoveTo/LineTo can do pulls in quite a bit of the library, due to how it handles angled lines and pen sizes using polygons.

If you stick to basic text and rectangles you'll be well-poised to keep the library footprint small. For a small embedded-device control panel you might not need anything more.